Practical Competitive Prediction

Project Description

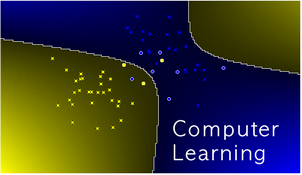

The problem of prediction is central in many areas of science, and various general methods of prediction have been developed in machine learning, statistics, and other areas of applied mathematics. Our research project is in the machine-learning tradition.